|

I am a Researcher in the Visual Computing and Embodied Intelligence group headed by Dr. Brojeshwar Bhowmick

at TCS Research, Kolkata, India. Previously, I was a research Master's student at the Robotics Research Center at IIIT Hyderabad, India, advised by Prof. K. Madhava Krishna.

I was a recipient of the Qualcomm Innovation Fellowship India for the year 2017-2018.

Master's thesis: My thesis focuses on reconstruction of moving (or static) vehicles on arbitrary road plane profiles from a moving monocular camera. I leverage feature prediction capabilities of deep networks tightly coupled with classical geometric methods such as multi-view stereo and bundle adjustment to jointly optimize the pose/shape of the vehicle and the local road plane geometry. [PPT].  |

Email / GitHub / CV / Google Scholar / LinkedIn |

|

|

|

Junaid Ahmed Ansari, Satyajit Tourani, Gourav Kumar, Brojeshwar Bhowmick IROS, 2023 Paper / Video In this work, we propose a novel approach to social robot navigation that learns to generate robot controls from a social motion latent space, and we explore the concept of humans' awareness of the robot within the social robot navigation framework. Generating robot controls from a social motion latent space leads to more anticipatory and less jerky trajectories, while incorporating humans' awareness results in shorter and smoother trajectories, owing to humans' ability to interact positively with the robot. |

|

Junaid Ahmed Ansari, Brojeshwar Bhowmick IROS, 2020 Paper / Video In this work, we present a simple, fast, and lightweight RNN-based framework for forecasting the future locations of humans in first-person monocular videos. The primary motivation was to design a network that could accurately predict future trajectories at a very high rate of ~78 trajectories per second on a CPU. |

|

Sarthak Sharma*, Junaid Ahmed Ansari*, J Krishna Murthy, K Madhava Krishna ICRA, 2018 (*equal contribution) arxiv / project page / code / video / KITTI Tracking Evaluation We propose simple, complementary cues for online tracking of cars in monocular image sequences. Our method was state-of-the-art on the KITTI Tracking benchmark at the time of submission.

|

|

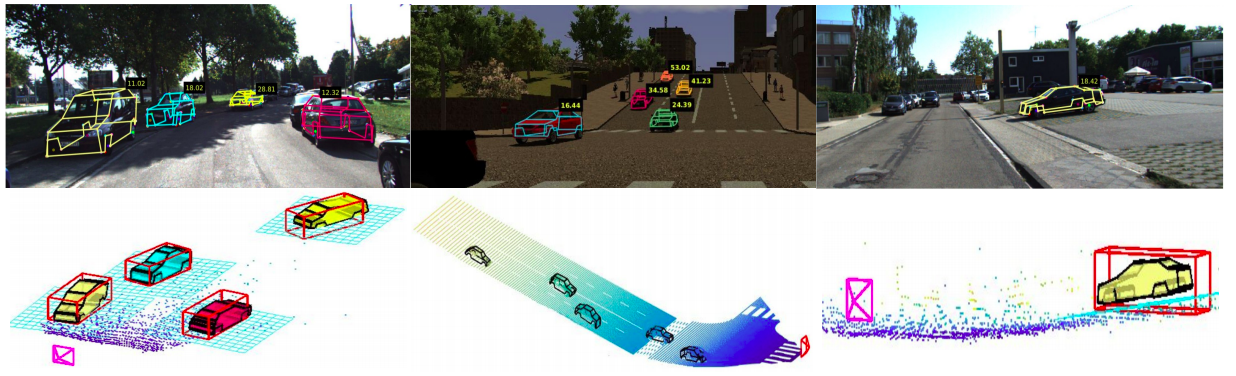

Junaid Ahmed Ansari*, Sarthak Sharma*, Anshuman Majumdar, J Krishna Murthy, K Madhava Krishna IROS, 2018 (*equal contribution) arxiv / video / code (coming soon) In this work, we estimate the pose and shape of moving (or static) vehicles on arbitrary road plane profiles. We propose a joint optimization framework that couples the pose and shape of the target vehicle with its local road plane geometry, thereby relaxing the widely exploited coplanarity assumption, which states that the vehicles of interest and the ego-vehicle share the same road plane. |

|

Shashank Srikanth, Junaid Ahmed Ansari, Karnik Ram, Sarthak Sharma, J Krishna Murthy, K Madhava Krishna IROS, 2019 arxiv / project page / code / video In this work, we propose an intermediate representation of the scene that not only facilitates better end-to-end trajectory forecasting, but also allows the model to be transferred zero-shot to other datasets. |

|

Swapnil Daga, Gokul B. Nair, Rahul Sajnani, Anirudha Ramesh, Junaid Ahmed Ansari, K. Madhava Krishna VISAPP (VISIGRAPP), 2021 arxiv / video BirdSLAM tackles challenges faced by other monocular SLAM systems (such as scale ambiguity in monocular reconstruction, dynamic object localization, and uncertainty in feature representation) by using an orthographic (bird's-eye) view as the configuration space in which localization and mapping are performed. By assuming only the height of the ego-camera above the ground, BirdSLAM leverages single-view metrology cues to accurately localize the ego-vehicle and all other traffic participants in bird's-eye view. |

|

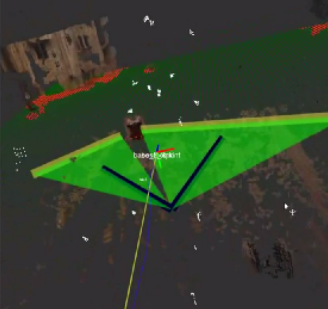

Gokul B. Nair, Swapnil Daga, Rahul Sajnani, Anirudha Ramesh, Junaid Ahmed Ansari, K. Madhava Krishna IEEE Intelligent Vehicles Symposium (IV), 2020 arxiv / video In this work, we tackle the problem of multibody SLAM from a monocular camera. The term multibody implies that we track the motion of the camera as well as that of other dynamic participants in the scene. We solve this rather intractable problem by leveraging single-view metrology, advances in deep learning, and category-level shape estimation. We propose a multi pose-graph optimization formulation to resolve the relative and absolute scale factor ambiguities involved. |

|

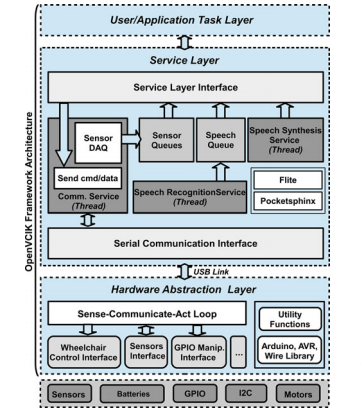

Junaid Ahmed Ansari, Arasi Sathyamurthi, Ramesh Balasubramanyam IEEE Transactions on Human-Machine Systems, 2016 paper / project page / code / video This voice command interface kit, developed by integrating open-source software and inexpensive hardware components, can be used for a variety of applications such as home automation, device control, and robot control; in this case we are controlling an electric wheelchair via voice commands. The software for the kit has been developed as a lightweight, multi-threaded and modular framework with replaceable components. It also provides a C++ library to support development of applications on top of it. |

|

Arasi Sathyamurthi, Junaid Ahmed Ansari, Ramesh Balasubramanyam Poster in Indo-German workshop on Neurobionics in clinical Neurology, 2012 poster / video

VACU is an innovative, inexpensive, and standalone embedded solution for voice activation of a powered wheelchair, enabling physically challenged people to become self-reliant in their locomotion. It is based entirely on COTS hardware and provides interfaces for thumb-sticks, sonar sensors, and a compass sensor, with PID control for steady motion and collision avoidance; it also has a visual and auditory feedback interface.

|

|

Please have a look at my CV for a complete list of projects. |

|

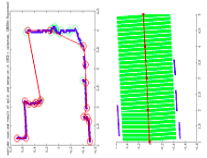

Junaid Ahmed Ansari, K Madhava Krishna Multi-robot SLAM project (DRDO-CAIR), 2016 report / video / code (coming soon)

Developed a ROS (C++) package for detecting safe and feasible frontiers for autonomous ground vehicles. Obstacles are segmented by fitting a road plane (along with camera height information) to the 3D point cloud generated using a stereo camera. Based on the obstacle information, vehicle dimensions, frontier direction, and traversability, we compute all possible headings that are safe and feasible for the robot to move in.

|

|

Image processing project code / video

Draw-In-Air is an application for drawing, capturing images, and controlling the mouse through color-marker-based gestures. Developed an algorithm for recognizing simple gestures that uses two color markers to trigger image capture.

|

|

code

The corridor is detected in the point cloud by looking for a dominant pair of parallel lines, separated by a distance threshold, in a 2D scan generated from the 3D data captured by a Kinect sensor.

Line segments were extracted using the recursive split-and-merge technique. The project was implemented in MATLAB.

|

|

|

2020 - Reviewer, ICRA

2020 - Reviewer, AAAI

2019 - Present - Reviewer, IROS

2019 - Present - Reviewer, RO-MAN

2019 - Present - Reviewer, IEEE Intelligent Vehicles Symposium (IV)

2018 - Teaching Assistant, CSE483 Mobile Robotics (robotic vision).

2017-18 - Fellow, Qualcomm Innovation Fellowship (QInF), India.

2017 - Winner, Qualcomm Innovation Fellowship (QInF), India.

2011-2012 - Fellow, Visiting Student Programme, Raman Research Institute, Bangalore, India.

2010 - First Prize, Inter-state C programming competition. Competition organized by IEEE Student Branch, SVCE, Bangalore, India.

| Thanks to Dr. Jon Baron for the web page template. |